How We Taught AI to See What We See, And Then Some

We gave our AI eyes, then taught it taste. With 512 dimensions and real-world feedback, it’s picking winners and getting sharper every day.

Our 1,500-hour time saver just got a major intelligence upgrade, and it’s now part of a completely automated, end-to-end business.

A few years ago, this work was entirely manual. Teams would scour multiple sources every day, pulling images one by one, sorting them, and deciding what to use. That didn’t scale, so we built custom scraping bots. They’ve been quietly running in production for years, gathering and organizing content at a pace no human could match. But that’s a story for another time.

Fast forward to today: the manual review step is gone, too.

Last month, we shared how we helped a client eliminate over 1,500 hours of tedious work through automation: replacing a daily image review process with an AI-powered system that outperformed the human it freed up. Missed it? Catch up here.

That alone was a big win. But we weren’t done.

This client reviews over a thousand images every single day and needs to identify the best ones for public-facing use. It’s a job that used to rely on instinct, intuition, and a very tired set of human eyes. Our initial AI sped things up tremendously, but we saw edge cases where it struggled to pick up on subtle visual traits that humans naturally recognized.

So, we gave it a vision upgrade.

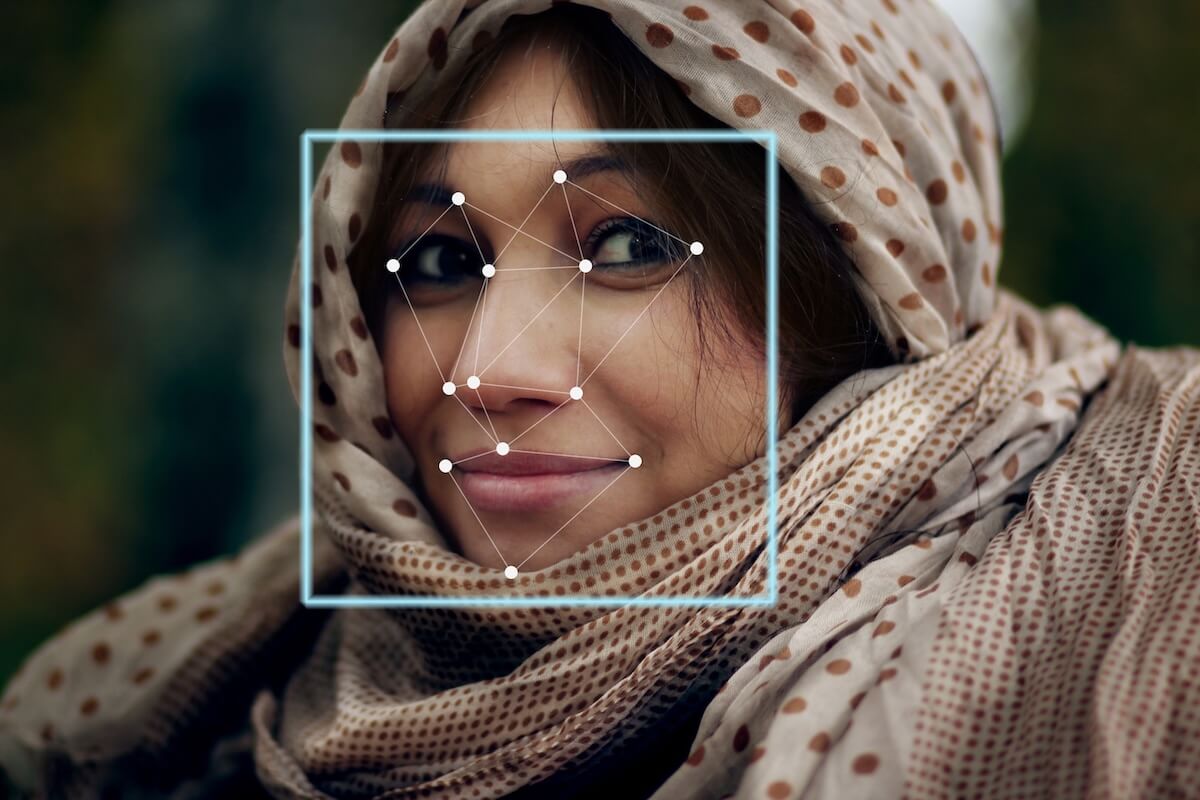

Using a technique called CLIP image embeddings, we essentially gave the AI a new language for understanding images: each photo is converted into a list of 512 numbers, giving us 512 distinct points of comparison. Think of it as a detailed fingerprint. Visually similar images get similar fingerprints, so the system can compare them numerically. We trained the system on thousands of past “great” images, teaching it exactly what “good” means in this context.
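To make the fingerprint idea concrete, here is a minimal sketch of one simple way to compare a new image against past “great” ones: average the known-good embeddings into a reference vector, then rank candidates by cosine similarity to it. This is an illustration, not necessarily the method running in production. The function names and the toy 4-number vectors (standing in for real 512-number embeddings) are assumptions made for the example.

```python
import math


def centroid(vectors):
    """Average a list of equal-length embedding vectors component-wise."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(candidates, great_embeddings):
    """Return (image_id, score) pairs sorted from best match to worst."""
    reference = centroid(great_embeddings)
    scored = [
        (image_id, cosine_similarity(vector, reference))
        for image_id, vector in candidates.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy data: 4 numbers per image instead of 512 (hypothetical values).
great = [[0.9, 0.1, 0.0, 0.2], [0.8, 0.2, 0.1, 0.1]]
candidates = {
    "img_a": [0.85, 0.15, 0.05, 0.15],  # looks like past winners
    "img_b": [0.0, 0.9, 0.8, 0.1],      # looks quite different
}
ranking = rank_candidates(candidates, great)
```

In this toy run, `img_a` ranks first because its vector points in nearly the same direction as the average of the past winners.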

Embeddings also make the system more efficient and cost-effective. Once generated, they can be stored and processed locally, removing the need for repeated (and expensive!) API calls to external AI models. This local approach also keeps all the image data private, ensuring it never leaves our environment. That means we’re not subject to an external AI’s content filters, which sometimes (rightly or wrongly) refuse to process certain images or misinterpret their context. With embeddings, we maintain control, bypassing those unpredictable model “morals” while staying fully compliant with the law and client requirements.
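The compute-once, reuse-locally idea can be sketched with a small cache. The example below assumes a local SQLite database and a hypothetical `embed_fn` callable standing in for whatever produces the embedding; the class name, table layout, and 32-bit float storage are illustrative choices, not a description of our actual setup.

```python
import sqlite3
from array import array


class EmbeddingCache:
    """Stores each image's embedding once so it is never recomputed."""

    def __init__(self, path=":memory:"):
        # ":memory:" keeps the demo self-contained; a file path would persist.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS embeddings ("
            "image_id TEXT PRIMARY KEY, vector BLOB NOT NULL)"
        )

    def get_or_compute(self, image_id, embed_fn):
        """Return the cached vector, calling embed_fn only on a cache miss."""
        row = self.conn.execute(
            "SELECT vector FROM embeddings WHERE image_id = ?", (image_id,)
        ).fetchone()
        if row is not None:
            cached = array("f")
            cached.frombytes(row[0])
            return list(cached)

        # Cache miss: compute once, store as packed 32-bit floats.
        stored = array("f", embed_fn(image_id))
        self.conn.execute(
            "INSERT INTO embeddings (image_id, vector) VALUES (?, ?)",
            (image_id, stored.tobytes()),
        )
        self.conn.commit()
        return list(stored)


# Hypothetical stand-in for the expensive embedding step.
calls = []

def fake_embed(image_id):
    calls.append(image_id)
    return [0.5, 0.25, -1.0, 2.0]


cache = EmbeddingCache()
first = cache.get_or_compute("img_a", fake_embed)
second = cache.get_or_compute("img_a", fake_embed)  # served from the cache
```

The second lookup never calls `fake_embed`, which is the whole point: the costly step happens once per image, and everything after that is a local read.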

You could say we added another dimension… or 512 of them.

Intergalactic precision, now in production.

The result? A smarter system that doesn’t just guess what looks right; it has learned it from thousands of examples. And now, it’s outperforming even the best human reviewer.

Better yet, it’s learning all on its own.

Every selected image is posted to high-reach, monetized social channels, at a rate of more than 320 posts per day. We track the revenue from each image, then feed that back into the system. The AI isn’t just learning from human preference; it’s learning from what actually performs. That feedback loop means it selects, learns, adapts, and optimizes without additional human input. The more it runs, the better it gets.
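A feedback loop like this can be sketched as a tiny online learner: predict revenue from an image’s embedding, observe the real revenue after posting, and nudge the weights toward the observed value. The example below uses a plain linear model with a gradient-style update as a stand-in; the real system may work quite differently. The `RevenueScorer` class, the learning rate, and the toy 2-number embeddings and revenue figures are all assumptions for illustration.

```python
class RevenueScorer:
    """Linear model that learns to predict revenue from an embedding."""

    def __init__(self, dimensions, learning_rate=0.1):
        self.weights = [0.0] * dimensions
        self.learning_rate = learning_rate

    def predict(self, embedding):
        """Predicted revenue: dot product of weights and embedding."""
        return sum(w * x for w, x in zip(self.weights, embedding))

    def update(self, embedding, revenue):
        """Move the weights a small step toward the observed revenue."""
        error = revenue - self.predict(embedding)
        self.weights = [
            w + self.learning_rate * error * x
            for w, x in zip(self.weights, embedding)
        ]

    def select(self, candidates, k):
        """Return the ids of the k candidates with the highest prediction."""
        ranked = sorted(
            candidates,
            key=lambda image_id: self.predict(candidates[image_id]),
            reverse=True,
        )
        return ranked[:k]


# Toy history of (embedding, observed revenue) pairs; values are made up.
history = [([1.0, 0.0], 5.0), ([0.0, 1.0], 1.0)]

scorer = RevenueScorer(dimensions=2)
for _ in range(50):  # replay the feedback as if it arrived over many days
    for embedding, revenue in history:
        scorer.update(embedding, revenue)

picked = scorer.select({"img_a": [0.9, 0.1], "img_b": [0.1, 0.9]}, k=1)
```

After replaying the toy history, the scorer prefers `img_a`, the candidate that resembles the higher-earning past image. A loop that only ever posts its current favorites can miss new trends, so a fuller version would likely also reserve some posts for exploration.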

This isn’t just an AI feature. It’s an autonomous content engine.

From sourcing to selection to publishing, it’s entirely automated, and constantly improving.

What’s next?

We’re refining the loop and exploring how visual trends shift over time, so the system can adapt to them dynamically. This system isn’t just supporting growth; it’s driving it.